This article is Part 1 of a series on Apache Druid Query Performance.

- Apache Druid Query Performance Bottlenecks: A Q&A Guide

- The Foundations of Apache Druid Performance Tuning: Data & Segments (You are here)

- Apache Druid Advanced Data Modeling for Peak Performance

- Writing Performant Apache Druid Queries

- Apache Druid Cluster Tuning & Resource Management

- Apache Druid Query Performance Bottlenecks: Series Summary

Welcome to the first part of our deep dive into Apache Druid performance. The physical layout of data is the bedrock of Druid’s speed. How data is partitioned, stored, and organized into segments dictates the efficiency of every subsequent operation. The most common and severe performance issues originate from sub-optimal data layout, making this the first and most critical area to investigate and optimize.

My queries are incredibly slow, and the system schema reveals tens of thousands of tiny segments. Will compaction solve this, and why is it so important?

Source Discussion: A frequently encountered issue detailed on Stack Overflow.

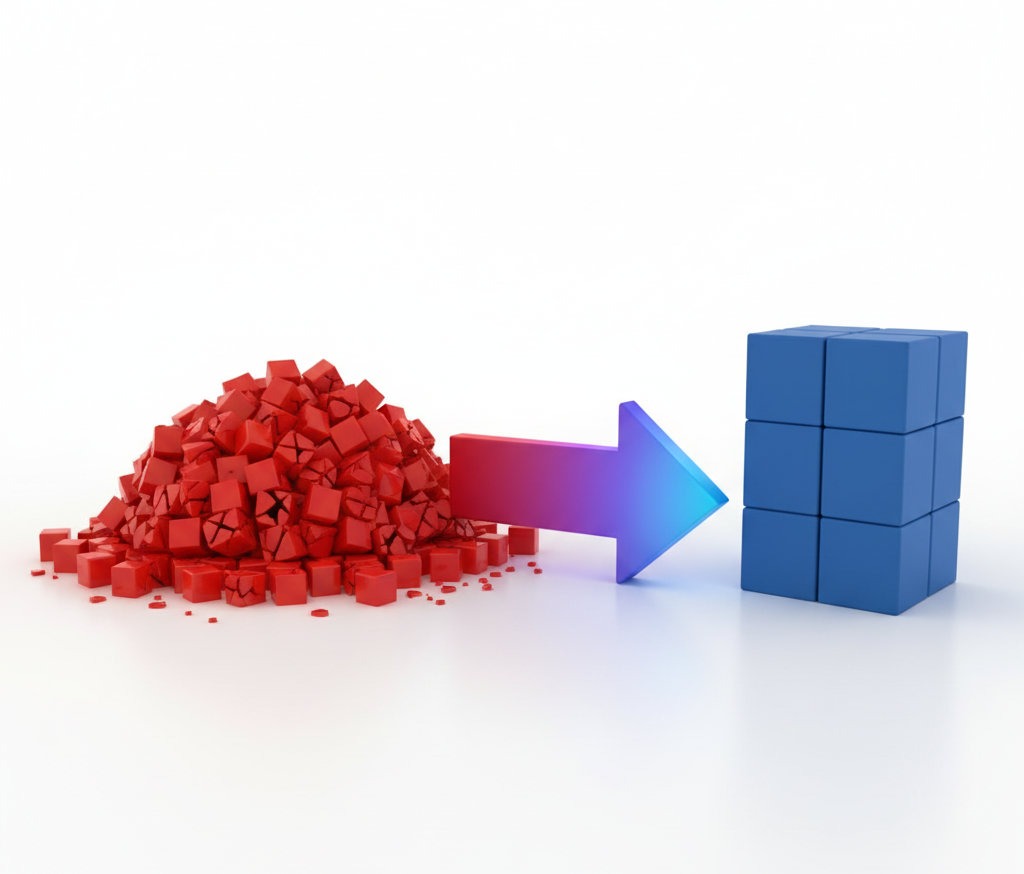

Answer: Yes, compaction is one of the most effective and critical solutions for this problem. A large number of small segments is a primary and severe cause of poor query performance in Druid. The architecture of Druid is designed for massively parallel processing, where queries are distributed across data servers (Historicals and Indexers) that process segments concurrently. However, this parallelism is not infinite, and every segment, regardless of its size, incurs a fixed scheduling and processing overhead. When a query’s time interval covers thousands or tens of thousands of tiny segments, this overhead accumulates and can overwhelm the system.
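To confirm that small segments are the problem before touching compaction, it helps to summarize segment metadata per datasource. The sketch below is illustrative only: the SQL string targets the `sys.segments` system table, while `summarize_segments` and its `small_row_threshold` of one million rows are hypothetical helpers chosen for this example, not Druid APIs or official thresholds.

```python
from statistics import mean

# Druid SQL against the sys.segments system table. Run it through whichever
# SQL client you already use; this script does not execute it.
SEGMENT_SUMMARY_SQL = """
SELECT "datasource",
       COUNT(*)        AS segment_count,
       AVG("num_rows") AS avg_rows,
       AVG("size")     AS avg_bytes
FROM sys.segments
WHERE is_published = 1 AND is_overshadowed = 0
GROUP BY 1
ORDER BY segment_count DESC
"""


def summarize_segments(rows, small_row_threshold=1_000_000):
    """Summarize (datasource, num_rows) pairs per datasource.

    small_row_threshold is an assumed cut-off for calling a segment "small".
    """
    rows_by_datasource = {}
    for datasource, num_rows in rows:
        rows_by_datasource.setdefault(datasource, []).append(num_rows)

    summary = {}
    for datasource, counts in rows_by_datasource.items():
        small = sum(1 for count in counts if count < small_row_threshold)
        summary[datasource] = {
            "segments": len(counts),
            "avg_rows": mean(counts),
            "small_fraction": small / len(counts),
        }
    return summary


# Made-up sample: three tiny segments and one well-sized segment.
sample = [("clicks", 10_000)] * 3 + [("clicks", 5_000_000)]
print(summarize_segments(sample))
```

A datasource with a high `small_fraction` and a large segment count is a likely compaction candidate.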

The query lifecycle in this scenario reveals several sources of inefficiency. First, the Broker must identify every segment relevant to the query’s time interval from its in-memory view of the segment timeline. It then fans out sub-queries to all the data nodes holding those segments. On each data node, a processing thread must be scheduled from a fixed-size pool (defined by druid.processing.numThreads) to handle each segment. This involves opening file handles, mapping the segment data into memory, and performing the actual scan. With a proliferation of small segments, the system spends a disproportionate amount of time managing these micro-tasks rather than executing meaningful computational work. Segment scans then wait in the processing queue (observable via the segment/scan/pending metric), and query/wait/time rises, indicating that the bottleneck is resource scheduling, not the work itself.
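The effect of fixed per-segment overhead can be illustrated with a deliberately simple model. This is a sketch under stated assumptions, not a description of Druid's scheduler: it assumes one data node, equally sized segments, segments processed in "waves" of one per thread, and invented numbers for overhead and scan time.

```python
import math


def estimated_wall_time_ms(num_segments, threads, per_segment_overhead_ms, total_scan_ms):
    """Toy model: each wave of `threads` segments costs overhead plus scan time."""
    scan_per_segment_ms = total_scan_ms / num_segments
    waves = math.ceil(num_segments / threads)
    return waves * (per_segment_overhead_ms + scan_per_segment_ms)


# Same total scan work (20 s of CPU), 16 processing threads,
# and an assumed 5 ms of fixed overhead per segment.
tiny = estimated_wall_time_ms(10_000, 16, 5, 20_000)
compacted = estimated_wall_time_ms(20, 16, 5, 20_000)
print(f"10,000 segments: {tiny:.0f} ms, 20 segments: {compacted:.0f} ms")
```

In this model the 10,000-segment layout spends 50,000 ms of thread time on overhead alone (10,000 × 5 ms), against 100 ms for the 20-segment layout. It also shows why the segment count should stay comfortably above the thread count: with 20 segments on 16 threads, the second wave leaves most threads idle.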

Compaction directly addresses this by functioning as a background re-indexing process. It merges numerous small segments into a smaller number of larger, more optimized ones. This fundamental change offers several benefits:

- Reduced Overhead: The per-segment scheduling and I/O overhead is drastically lowered. A query that previously had to orchestrate 10,000 segment scans might now only need to manage 20.

- Efficient Resource Utilization: Each thread in the processing pool now operates on a much larger, more significant chunk of data (ideally millions of rows), making far more efficient use of CPU cycles.

- Improved Data Locality: Compaction can also improve data locality and the effectiveness of rollup, further reducing the total data size.

This issue often arises from a fundamental tension in real-time ingestion pipelines. To make data available for querying with minimal latency, streaming ingestion tasks (which run in Peon processes) frequently persist their in-memory buffers to small, immutable segments on disk. This is by design. However, without a corresponding data lifecycle management strategy, this continuous creation of small segments leads to “segment proliferation.” Slow queries on recently ingested data are therefore often not a query-time problem at all, but a symptom of an ingestion strategy that is not balanced by a robust, always-on compaction strategy. Compaction should not be viewed as an occasional cleanup task but as an integral, non-negotiable component of any Apache Druid ingestion pipeline. Failing to configure it properly is a design flaw that leads to performance degradation as segments accumulate.
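As a concrete starting point, an always-on compaction policy can be expressed as an auto-compaction configuration for the datasource. The sketch below builds such a payload in Python. The field names (`dataSource`, `skipOffsetFromLatest`, `tuningConfig.partitionsSpec`) follow the auto-compaction configuration format as we understand it and should be checked against the documentation for your Druid version. The datasource name `clickstream`, the helper function, and the default values are assumptions made for illustration.

```python
import json


def build_auto_compaction_config(datasource, max_rows_per_segment=5_000_000, skip_offset="P1D"):
    """Build an auto-compaction payload (illustrative; verify fields for your version)."""
    return {
        "dataSource": datasource,
        # Leave the most recent data alone so compaction does not
        # compete with streaming tasks that are still writing to it.
        "skipOffsetFromLatest": skip_offset,
        "tuningConfig": {
            "partitionsSpec": {
                "type": "dynamic",
                "maxRowsPerSegment": max_rows_per_segment,
            }
        },
    }


config = build_auto_compaction_config("clickstream")
print(json.dumps(config, indent=2))
```

The resulting JSON would be submitted through the Coordinator's compaction configuration API or the web console. The `skipOffsetFromLatest` value is the main knob for balancing freshness against compaction: too short and compaction collides with active ingestion, too long and recent data stays fragmented.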

What are the ideal segment size settings? The documentation mentions 5 million rows and 300-700MB. Which one should I prioritize?

Source Discussion: A direct follow-up based on official recommendations and community experience.

Answer: Prioritize the number of rows per segment, targeting approximately 5 million rows. The segment byte size is a useful secondary heuristic but should not be the primary target. The reasoning behind this prioritization lies in how Druid executes queries and manages work. Each segment is a fundamental unit of parallel processing, and a single thread from a data node’s processing pool is assigned to scan one segment at a time. Therefore, the number of rows in a segment directly correlates to the amount of computational work that a single thread will perform. By targeting a consistent row count across all segments, you ensure a balanced and predictable distribution of work across the cluster’s processing threads. This maximizes the effectiveness of parallelism and makes query performance more consistent.

The 300-700 MB recommendation is a general guideline that often aligns with the 5 million row target for datasets with typical schema complexity and data types. However, this correlation can easily break down. For example:

- A datasource with heavy rollup and many low-cardinality string columns will have a very small average row size. A 500 MB segment might contain 15 million rows, which could be too much work for a single thread, potentially causing that task to become a straggler and delay the entire query.

- Conversely, a datasource with no rollup, high-cardinality string columns, and complex types (like sketches) will have a large average row size. A 500 MB segment might only contain 500,000 rows, leading back to the problem of excessive per-segment overhead.

The 5 million row target represents a “sweet spot” that balances the macro-level overhead of segment management with the micro-level goal of maximizing CPU cache hits during the core computational loops.
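The two counterexamples above come down to simple arithmetic on average row size. The following sketch makes that explicit. The average row sizes (33, 1,000, and 100 bytes) are invented to reproduce the figures in the text and are not measurements from a real datasource.

```python
MB = 1_000_000


def rows_in_segment(segment_bytes, avg_row_bytes):
    """Approximate row count for a segment of a given on-disk size."""
    return segment_bytes // avg_row_bytes


def segment_bytes_for_rows(target_rows, avg_row_bytes):
    """Approximate on-disk size needed to hold target_rows."""
    return target_rows * avg_row_bytes


# Heavy rollup, low-cardinality strings: very small rows.
print(rows_in_segment(500 * MB, 33))      # 15151515 rows in 500 MB
# No rollup, sketches, high-cardinality strings: very large rows.
print(rows_in_segment(500 * MB, 1_000))   # 500000 rows in 500 MB
# A "typical" row size where both guidelines agree.
print(segment_bytes_for_rows(5_000_000, 100) // MB)  # 500 MB
```

Measuring the actual average row size of a datasource (total bytes divided by total rows) shows quickly whether the byte-size guideline and the row-count target agree for that datasource.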

How does segmentGranularity (e.g., DAY, HOUR) affect performance and storage?

Answer: segmentGranularity is a critical ingestion-time setting that defines the time window for which segments are created. It directly impacts time-based partitioning, data locality, and query performance.

- Performance Impact: When you query Druid, the Broker first uses the query’s time interval to prune the list of segments to scan. A finer segmentGranularity (like HOUR) creates more segments for a given period than a coarser one (like DAY).

  - Queries over short intervals: A finer granularity can be faster. A query for 30 minutes of data with HOUR granularity will only need to scan segments for that single hour. With DAY granularity, it would have to scan the entire day’s segment.

  - Queries over long intervals: A coarser granularity is often better. A query for 90 days of data with DAY granularity will scan ~90 segments. With HOUR granularity, it would need to scan 90 * 24 = 2160 segments, introducing significant overhead.

- Storage and Ingestion Impact: segmentGranularity also influences how data is handed off from real-time ingestion tasks. A real-time task works on one segment for a given granularity period at a time. A DAY granularity means a single task will hold all data for that day until the segment is published. This can increase memory pressure on Middle Managers for high-volume streams. Using HOUR granularity allows tasks to hand off smaller segments more frequently.

Best Practice: Choose a segmentGranularity that aligns with your most common query patterns. If most queries are over a few hours, HOUR is a good choice. If they typically span multiple days or weeks, DAY is more appropriate.
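The segment counts quoted above can be reproduced with a small calculation. The sketch assumes exactly one segment per time chunk and query intervals aligned to chunk boundaries. Real datasources often have several partitions per chunk, so treat the result as a lower bound. The `GRANULARITY` table and the function name are illustrative.

```python
import math
from datetime import timedelta

# Only the two granularities discussed in the text.
GRANULARITY = {"HOUR": timedelta(hours=1), "DAY": timedelta(days=1)}


def time_chunks_scanned(query_span, granularity):
    """Minimum number of time chunks a query of length query_span touches."""
    return math.ceil(query_span / GRANULARITY[granularity])


ninety_days = timedelta(days=90)
print(time_chunks_scanned(ninety_days, "DAY"))    # 90
print(time_chunks_scanned(ninety_days, "HOUR"))   # 2160

thirty_minutes = timedelta(minutes=30)
# Both return 1 chunk, but the DAY chunk covers 24 hours of data
# while the HOUR chunk covers only one.
print(time_chunks_scanned(thirty_minutes, "HOUR"), time_chunks_scanned(thirty_minutes, "DAY"))
```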

Besides merging small segments, what other benefits does compaction offer?

Answer: While merging small segments is the primary reason for compaction, it offers several other powerful optimization capabilities:

- Re-partitioning: Compaction can change the secondary partitioning scheme within a time chunk. You can add or change a partitionedBy or clusteredBy clause to sort data by a frequently filtered dimension, which dramatically improves data locality and filter performance.

- Schema Changes: Compaction can be used to apply certain schema changes to existing data without a full re-ingestion. This includes adding new filtered dimensions or changing the metric definitions (e.g., switching from an approximate hyperUnique to a more accurate HLLSketch).

- Further Rollup: If your initial ingestion had a fine queryGranularity (e.g., MINUTE), you can run a compaction job with a coarser queryGranularity (e.g., HOUR) to further roll up the data, reducing storage size and speeding up queries over the historical data.

Compaction is not just a cleanup tool; it’s a powerful data lifecycle management and optimization feature that allows you to evolve your data’s physical layout as your query patterns change.
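As a rough illustration of combining re-partitioning with further rollup, the sketch below builds a compaction configuration that clusters data by one dimension and coarsens the query granularity. It assumes the native auto-compaction format, where secondary partitioning is expressed through `tuningConfig.partitionsSpec` and rollup through `granularitySpec`. Verify these field names and any additional required settings against the documentation for your Druid version. The datasource `clickstream` and the dimension `country` are hypothetical.

```python
import json


def build_reshaping_compaction_config(
    datasource, partition_dimension, query_granularity="HOUR", target_rows=5_000_000
):
    """Compaction payload that re-partitions and re-rolls data (illustrative)."""
    return {
        "dataSource": datasource,
        "granularitySpec": {
            # Coarser than the original ingestion granularity, e.g. MINUTE -> HOUR.
            "queryGranularity": query_granularity,
            "rollup": True,
        },
        "tuningConfig": {
            "partitionsSpec": {
                # Range partitioning groups rows with similar values of the
                # chosen dimension into the same segments.
                "type": "range",
                "partitionDimensions": [partition_dimension],
                "targetRowsPerSegment": target_rows,
            }
        },
    }


config = build_reshaping_compaction_config("clickstream", "country")
print(json.dumps(config, indent=2))
```

Choosing the partition dimension is the main design decision here: it pays off only if most queries filter on that dimension, since that is what lets whole segments be skipped.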