This article is part of a series on deploying a production‑ready Apache Druid cluster on Kubernetes. In Part 3, we cover the complete installation and configuration of a production‑grade cluster, building on the foundation and preparation covered previously. See Infrastructure Setup for Enterprise Apache Druid on Kubernetes – Building the Foundation and Installing a Production-Ready Apache Druid Cluster on Kubernetes — Part 2: Druid Deployment Preparation. For conversational assistance, see Apache Druid MCP Server: Conversational AI for Time Series.

In this series on Apache Druid on Kubernetes

- Infrastructure Setup for Enterprise Apache Druid on Kubernetes – Building the Foundation

- Installing a Production-Ready Apache Druid Cluster on Kubernetes — Part 2: Druid Deployment Preparation

- Apache Druid on Kubernetes: Production-ready with TLS, MM‑less, Zookeeper‑less, GitOps

- Apache Druid Security on Kubernetes: Authentication & Authorization with OIDC (PAC4J), RBAC, and Azure AD

Related project: Apache Druid MCP Server: Conversational AI for Time Series

If you’re planning or running Apache Druid in production, we offer pragmatic Apache Druid consulting and support for time‑series projects—from architecture reviews and Kubernetes‑native deployments to performance tuning and production runbooks. If expert guidance would accelerate your roadmap, feel free to reach out.

Introduction

After laying the infrastructure groundwork and preparing your deployment environment, it’s time for the main event: deploying your production-ready Apache Druid cluster on Kubernetes. This isn’t just about applying a few YAML files—we’re talking about configuring a sophisticated, distributed analytics engine that can handle enterprise-scale workloads.

Modern time series applications demand more than basic Druid setups. They require carefully tuned configurations, proper resource allocation, and enterprise-grade security implementations. The traditional approach of manually managing MiddleManager components is giving way to Kubernetes-native architectures that leverage the platform’s inherent capabilities.

This guide walks you through the complete installation process, from understanding the DruidCluster custom resource to implementing MM-less deployments and Zookeeper-less service discovery. We’ll cover everything from component-specific configurations to production deployment patterns, ensuring your Druid installation is ready for real-world enterprise demands.

Table of contents

- Introduction

- Understanding the DruidCluster Custom Resource

- Common Configuration Settings

- Coordinator Component Configuration

- Overlord Component Configuration

- Broker Component Configuration

- Historical Component

- Kubernetes-Native Task Execution

- Production Deployment Best Practices

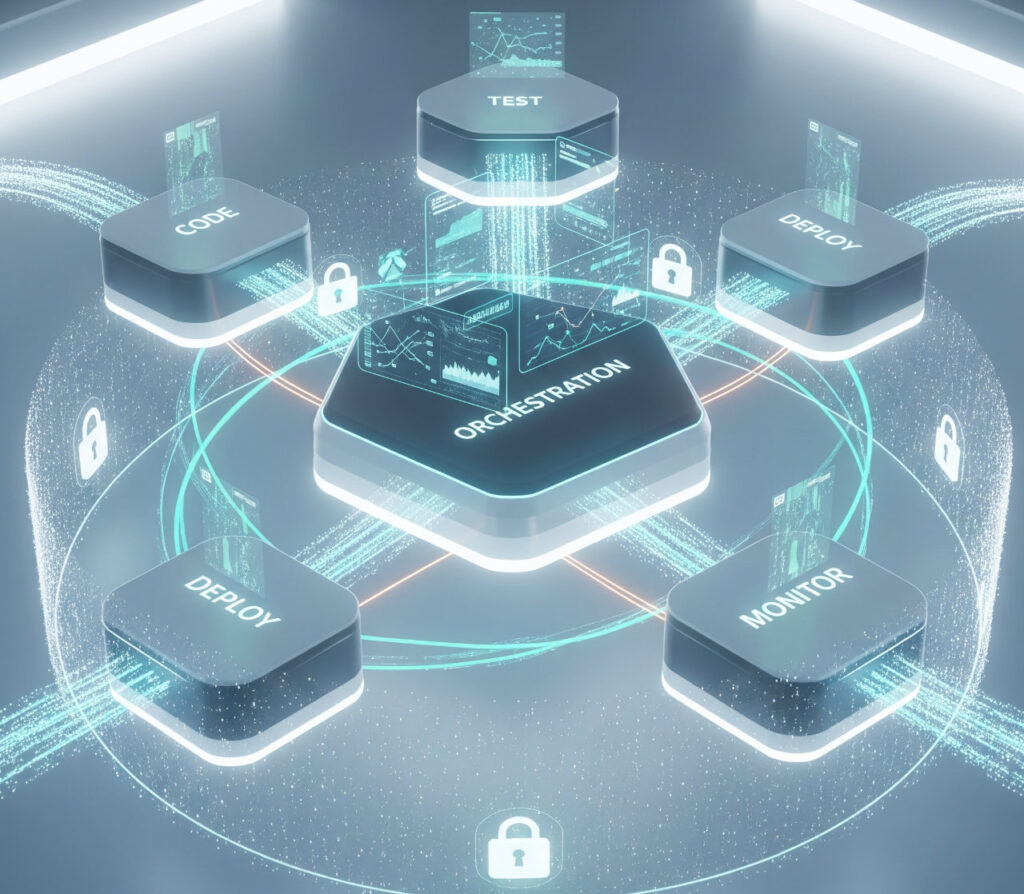

- Integration with GitOps Workflow

- Deployment Patterns Comparison

- Frequently Asked Questions

- Conclusion

Understanding the DruidCluster Custom Resource

The heart of your Druid installation lies within the DruidCluster custom resource, which acts as the declarative blueprint for your entire cluster. This isn’t your typical Kubernetes deployment—it’s a sophisticated orchestration mechanism that manages multiple interconnected services with complex dependencies.

Let’s examine the foundational configuration that brings together all the infrastructure components we prepared in earlier parts:

    apiVersion: "druid.apache.org/v1alpha1"
    kind: "Druid"
    metadata:
      name: iuneradruid
      namespace: druid
    spec:
      commonConfigMountPath: /opt/druid/conf/druid/cluster/_common
      rollingDeploy: false
      startScript: /druid.sh
      image: iunera/druid:29.0.1
      imagePullPolicy: Always
      podAnnotations:
        reason: this-cluster-is-k8s-discovery
      podLabels:
        app.kubernetes.io/networkpolicy-group: druid
      securityContext:
        fsGroup: 1000
        runAsUser: 1000
        runAsGroup: 1000

This configuration establishes several critical principles. The rollingDeploy: false setting turns off the operator's automatic rolling upgrade, which keeps update behavior predictable in production environments. The custom image specification allows for enterprise-specific extensions and security patches.

The security context configuration follows the principle of least privilege by running processes as non-root user 1000. This approach significantly reduces the attack surface while maintaining compatibility with persistent volume requirements.

Common Configuration Settings

Druid Extensions Load List

The Druid cluster (from iuneradruid-cluster.yaml line 65) enables a curated set of extensions that power security, ingestion, storage backends, query engines, and Kubernetes-native operation. These are declared centrally so that every service loads the same capabilities at startup:

    # common.runtime.properties
    druid.extensions.loadList=["iu-code-ingestion-druid-extension", "druid-pac4j", "druid-basic-security", "simple-client-sslcontext", "druid-multi-stage-query", "druid-distinctcount", "druid-s3-extensions", "druid-stats", "druid-histogram", "druid-datasketches", "druid-lookups-cached-global", "postgresql-metadata-storage", "druid-kafka-indexing-service", "druid-time-min-max", "druid-kubernetes-overlord-extensions", "druid-kubernetes-extensions"]

What each extension does and why it matters for sizing:

- iu-code-ingestion-druid-extension: Adds custom pre-ingestion parsers to Apache Druid. This custom ingestion logic is packaged into the image, so ensure it is present in the container; resource use depends on what it implements.

- druid-pac4j: Enables web SSO/OIDC authentication for human users.

- druid-basic-security: Provides internal basic auth/authz (metadata-backed) and the escalator; required for service-to-service auth.

- simple-client-sslcontext: Supplies an SSLContext for clients using the provided keystores/truststores; required for TLS-enabled outbound HTTP.

- druid-multi-stage-query: Activates the Multi-Stage Query (MSQ) engine used for parallel SQL-based ingestion/queries. Can increase Broker and task memory/CPU during large jobs; plan heap and direct memory accordingly.

- druid-distinctcount: Adds approximate distinct-count aggregators. Can use off-heap memory; size processing/direct buffers to match workload.

- druid-s3-extensions: Enables S3-compatible deep storage and indexer logs.

- druid-stats: Statistical aggregators (e.g., variance/stddev) used in ingestion/queries.

- druid-histogram: Histogram/approximation aggregators. May increase memory during heavy aggregations.

- druid-datasketches: Apache DataSketches (HLL, quantiles, theta) for approximate analytics. Influences processing memory; tune direct memory on Brokers/Tasks.

- druid-lookups-cached-global: Global cached lookups for fast dimension enrichment. Consumes heap on Brokers/Historicals; account for cache size.

- postgresql-metadata-storage: PostgreSQL connector for the Druid metadata store. Required when using Postgres.

- druid-kafka-indexing-service: Kafka ingestion (supervisor/task). Affects Overlord and task pods; configure network policies to permit access to the Kafka brokers.

- druid-time-min-max: Min/Max time aggregators and utilities used in queries/optimizations.

- druid-kubernetes-overlord-extensions: Kubernetes runner for MM-less tasks (K8s Jobs). Essential for Kubernetes-native task execution and a prerequisite for task autoscaling.

- druid-kubernetes-extensions: Kubernetes-native service discovery (no Zookeeper). Required for the Zookeeper-less setup.

Operational notes:

- All extensions must be available under the configured directory (druid.extensions.directory=/opt/druid/extensions) in the image.

- Security/TLS-related extensions rely on the keystores mounted at /druid/jks and the TLS settings in common.runtime.properties.

- Sketch/histogram extensions primarily impact processing memory; ensure -XX:MaxDirectMemorySize and druid.processing.buffer.sizeBytes are sized for your query patterns.

TLS Configuration

Enterprise deployments demand encryption at rest and in transit; this section covers the in-transit part. The configuration integrates the TLS keystores we prepared in Part 2:

    volumeMounts:
      - name: keystores
        mountPath: /druid/jks
        readOnly: true
    volumes:
      - name: keystores
        secret:
          secretName: iuneradruid-jks-keystores-secret
    env:
      - name: druid_server_https_keyStorePassword
        valueFrom:
          secretKeyRef:
            name: iuneradruid-jks-keystores-secret
            key: keystorepassword

The TLS configuration enables secure communication between all Druid components:

    common.runtime.properties: |
      # global TLS settings
      druid.enableTlsPort=true
      # Disable non-TLS Ports
      druid.enablePlaintextPort=false
      # client side TLS settings
      druid.client.https.protocol=TLSv1.2
      druid.client.https.trustStoreType=jks
      druid.client.https.trustStorePath=/druid/jks/truststore.jks
      druid.client.https.trustStorePassword=changeit
      druid.client.https.validateHostnames=false
      # server side TLS settings
      druid.server.https.keyStoreType=jks
      druid.server.https.keyStorePath=/druid/jks/keystore.jks
      druid.server.https.certAlias=druid
      druid.server.https.trustStoreType=jks
      druid.server.https.trustStorePath=/druid/jks/truststore.jks
      druid.server.https.trustStorePassword=changeit
      druid.server.https.validateHostnames=false
      druid.server.https.protocol=TLSv1.2
      druid.server.https.includeCipherSuites=["TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384", "TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA256"]

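This configuration disables plain-text communication entirely, so all inter-component traffic is encrypted with TLS 1.2 using the two cipher suites listed in includeCipherSuites. Note that validateHostnames=false turns off hostname verification for these connections; certificates are still checked against the truststore.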

- Because druid.enablePlaintextPort=false, all component listeners run over HTTPS on their configured ports.

- Component TLS ports:

  - Broker: 8282

  - Coordinator: 8281

  - Historical: 8283

  - Overlord: 8290

  - Router: 9088

- Health probes and Services must use scheme: HTTPS for these endpoints.
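The port list above can be turned into probe definitions mechanically. The following is a minimal Python sketch, assuming the ports shown in this section; the helper name `https_probe` and the default path are illustrative choices, not part of the Druid operator.

```python
# Component TLS ports as listed in this section.
TLS_PORTS = {
    "broker": 8282,
    "coordinator": 8281,
    "historical": 8283,
    "overlord": 8290,
    "router": 9088,
}


def https_probe(component: str, path: str = "/status/health") -> dict:
    """Build an httpGet probe that targets the component's TLS port (hypothetical helper)."""
    return {
        "httpGet": {
            "path": path,
            "port": TLS_PORTS[component],
            "scheme": "HTTPS",  # plaintext ports are disabled cluster-wide
        }
    }


if __name__ == "__main__":
    for name in sorted(TLS_PORTS):
        print(name, https_probe(name))
```

Generating probes from one port map keeps the scheme and port in sync if a port ever changes.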

Authentication / Authorization

Secure, multi-tenant clusters use a dual authenticator chain with RBAC-backed authorization and an internal escalator account for service-to-service calls (from iuneradruid-cluster.yaml) to ensure that the cluster still works even if other authentication backends are down.

    common.runtime.properties: |
      # Authentication chain: internal basic auth first, then OIDC (pac4j)
      druid.auth.authenticatorChain=["db","pac4j"]

      # pac4j (OIDC) authenticator for human users
      druid.auth.authenticator.pac4j.name=pac4j
      druid.auth.authenticator.pac4j.type=pac4j
      druid.auth.authenticator.pac4j.authorizerName=pac4j
      druid.auth.pac4j.oidc.scope=openid profile email
      # druid.auth.pac4j.oidc.clientID=ENV_VAR_FROM_SECRET
      # druid.auth.pac4j.oidc.clientSecret=ENV_VAR_FROM_SECRET
      # druid.auth.pac4j.oidc.discoveryURI=ENV_VAR_FROM_SECRET
      # druid.auth.pac4j.cookiePassphrase=ENV_VAR_FROM_SECRET

      # Basic authenticator backed by metadata store for internal/service users
      druid.auth.authenticator.db.type=basic
      druid.auth.authenticator.db.skipOnFailure=true
      druid.auth.authenticator.db.credentialsValidator.type=metadata
      druid.auth.authenticator.db.authorizerName=db
      # druid.auth.authenticator.db.initialAdminPassword=ENV_VAR_FROM_SECRET
      # druid.auth.authenticator.db.initialInternalClientPassword=ENV_VAR_FROM_SECRET

      # Authorizers
      druid.auth.authorizers=["db", "pac4j"]
      druid.auth.authorizer.db.type=basic
      druid.auth.authorizer.db.roleProvider.type=metadata
      druid.auth.authorizer.pac4j.type=allowAll

      # Escalator (cluster-internal authorization)
      druid.escalator.type=basic
      druid.escalator.authorizerName=db
      # druid.escalator.internalClientUsername=ENV_VAR_FROM_SECRET
      # druid.escalator.internalClientPassword=ENV_VAR_FROM_SECRET

The corresponding Secrets are wired through environment variables:

    env:
      # Druid Basic-Auth
      - name: druid_auth_authenticator_db_initialAdminPassword
        valueFrom:
          secretKeyRef:
            name: iuneradruid-auth-secrets
            key: initialAdminPassword
      - name: druid_escalator_internalClientUsername
        valueFrom:
          secretKeyRef:
            name: iuneradruid-auth-secrets
            key: internalUser
      - name: druid_auth_authenticator_db_initialInternalClientPassword
        valueFrom:
          secretKeyRef:
            name: iuneradruid-auth-secrets
            key: internalClientPassword
      - name: druid_escalator_internalClientPassword
        valueFrom:
          secretKeyRef:
            name: iuneradruid-auth-secrets
            key: internalClientPassword
      # OIDC (pac4j) secrets
      - name: druid_auth_pac4j_oidc_clientID
        valueFrom:
          secretKeyRef:
            name: iuneradruid-auth-secrets
            key: pac4j-clientID
      - name: druid_auth_pac4j_oidc_clientSecret
        valueFrom:
          secretKeyRef:
            name: iuneradruid-auth-secrets
            key: pac4j-clientSecret
      - name: druid_auth_pac4j_oidc_discoveryURI
        valueFrom:
          secretKeyRef:
            name: iuneradruid-auth-secrets
            key: pac4j-discoveryURI
      - name: druid_auth_pac4j_cookiePassphrase
        valueFrom:
          secretKeyRef:
            name: iuneradruid-auth-secrets
            key: pac4j-cookiePassphrase

Note: Using pac4j is optional. At this stage of the project, pac4j handles authentication only; it cannot be used for authorization, which is why the pac4j authorizer is set to allowAll.
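The environment variable names above mirror the commented-out property names in common.runtime.properties. The sketch below shows that mapping in Python, assuming the image's start script follows the convention of the official Apache Druid Docker image, where `druid_`-prefixed variables become properties by replacing underscores with dots. The custom /druid.sh used here may behave differently, and the function name `env_to_property` is hypothetical.

```python
def env_to_property(env_name: str) -> str:
    """Map a druid_-prefixed environment variable to a runtime property name.

    Assumption: underscores are replaced by dots, as in the official image's
    start script. Property names that themselves contain underscores cannot
    be expressed this way.
    """
    if not env_name.startswith("druid_"):
        raise ValueError(f"not a Druid configuration variable: {env_name!r}")
    return env_name.replace("_", ".")


if __name__ == "__main__":
    for name in (
        "druid_auth_pac4j_oidc_clientID",
        "druid_escalator_internalClientPassword",
    ):
        print(name, "->", env_to_property(name))
```

Checking the mapping this way helps catch a misspelled variable name before it silently fails to override a property.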

S3 Deep Storage and Indexing Logs

Druid stores immutable segments and indexing task logs in S3. The following cluster-wide settings belong in common.runtime.properties and apply to all components:

    common.runtime.properties: |
      # Deep Storage (S3)
      druid.storage.type=s3
      druid.storage.bucket=<bucket ID>
      druid.storage.baseKey=deepstorage

      # Indexer task logs (S3)
      druid.indexer.logs.type=s3
      druid.indexer.logs.s3Bucket=<bucket ID>
      druid.indexer.logs.s3Prefix=indexlogs

- Buckets and prefixes should exist and be lifecycle-managed according to your retention and cost policies.

- For S3 connectivity, provide credentials via your cloud-native mechanism (IRSA/role binding) or environment variables/secrets.

Zookeeper-less Installation (Kubernetes-Native Service Discovery)

Traditional Druid deployments depend on Zookeeper for service discovery and coordination. While Zookeeper is battle-tested, it introduces additional complexity and operational overhead in Kubernetes environments. Modern Druid supports Kubernetes-native service discovery, which removes the Zookeeper dependency and uses the Kubernetes API instead.

    common.runtime.properties: |
      druid.zk.service.enabled=false
      druid.serverview.type=http
      druid.discovery.type=k8s
      druid.discovery.k8s.clusterIdentifier=iuneradruid
      druid.discovery.k8s.leaseDuration=PT60S
      druid.discovery.k8s.renewDeadline=PT17S
      druid.discovery.k8s.retryPeriod=PT5S

This configuration enables Druid components to discover each other through the Kubernetes API, using pod annotations for component state (e.g. isLeader) and pod labels to identify cluster components.

Prerequisites

- Druid Kubernetes extension loaded in all services:

      common.runtime.properties: |
        druid.extensions.loadList=["...", "druid-kubernetes-extensions"]

- Dedicated ServiceAccount for Druid pods and task pods. Ensure the operator runs Druid pods with this ServiceAccount (typically serviceAccountName under the pod template/spec). Update your task template to use the same account:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: default-task-template
      namespace: druid
    data:
      default-task-template.yaml: |+
        apiVersion: v1
        kind: PodTemplate
        template:
          spec:
            serviceAccount: druid
            restartPolicy: Never

RBAC Requirements for Service Discovery

Kubernetes service discovery requires appropriate RBAC permissions. The following configuration grants necessary access:

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: druid
      namespace: druid
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      name: druid-k8s-discovery
      namespace: druid
    rules:
      - apiGroups: [""]
        resources: ["pods","endpoints","services"]
        verbs: ["get","list","watch"]
      - apiGroups: ["coordination.k8s.io"]
        resources: ["leases"]
        verbs: ["get","list","watch","create","update","patch"]
    ---
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: druid-k8s-discovery
      namespace: druid
    subjects:
      - kind: ServiceAccount
        name: druid
        namespace: druid
    roleRef:
      kind: Role
      name: druid-k8s-discovery
      apiGroup: rbac.authorization.k8s.io

This configuration follows a least-privilege model scoped to the namespace. Broaden permissions only if your custom templates or operational needs require additional resources.

Service Discovery Performance Optimizations

- leaseDuration (PT60S): How long a component holds its discovery lease

- renewDeadline (PT17S): Maximum time to renew the lease before expiration

- retryPeriod (PT5S): Interval between renewal attempts

These timings balance quick failure detection with reduced API server load. Shorter intervals improve recovery times but increase Kubernetes API traffic. These settings were tested with an AWS EKS control plane.
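When tuning these three durations, it helps to check that they still relate to each other sensibly. The Python sketch below parses the ISO-8601 values and verifies the ordering leaseDuration > renewDeadline > retryPeriod. That ordering is an assumption borrowed from common leader-election implementations rather than something stated in this article, and the function names are illustrative.

```python
import re
from datetime import timedelta

# Supports the simple PT<h>H<m>M<s>S forms used in this configuration.
_DURATION = re.compile(r"^PT(?:(\d+)H)?(?:(\d+)M)?(?:(\d+)S)?$")


def parse_duration(value: str) -> timedelta:
    """Parse a subset of ISO-8601 durations such as PT60S or PT4H."""
    match = _DURATION.match(value)
    if not match or not any(match.groups()):
        raise ValueError(f"unsupported duration: {value!r}")
    hours, minutes, seconds = (int(g) if g else 0 for g in match.groups())
    return timedelta(hours=hours, minutes=minutes, seconds=seconds)


def timings_are_ordered(lease: str, renew: str, retry: str) -> bool:
    """Return True when lease > renew deadline > retry period (assumed rule)."""
    return parse_duration(lease) > parse_duration(renew) > parse_duration(retry)


if __name__ == "__main__":
    print(timings_are_ordered("PT60S", "PT17S", "PT5S"))
```

The values from this section pass the check; a renew deadline longer than the lease would not.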

Comparison: Zookeeper vs Kubernetes Service Discovery

| Feature | Zookeeper | Kubernetes Native |
|---|---|---|
| Operational Complexity | Requires separate Zookeeper cluster | Uses existing Kubernetes infrastructure |
| High Availability | Requires 3+ Zookeeper nodes | Leverages Kubernetes control plane HA |
| Network Dependencies | Additional network connections | Uses cluster networking |
| Monitoring | Separate Zookeeper monitoring | Integrated Kubernetes monitoring |
| Security | Separate security configuration | Inherits Kubernetes RBAC |
| Backup/Recovery | Zookeeper-specific procedures | Kubernetes-native backup strategies |
| Scalability | Zookeeper cluster limitations | Scales with Kubernetes control plane |

Coordinator Component Configuration

The Coordinator manages segment lifecycle, tier balancing, and retention enforcement. The configuration below mirrors iuneradruid-cluster.yaml and is adapted for TLS-only clusters:

    coordinator:
      kind: StatefulSet
      druid.port: 8281
      maxSurge: 2
      maxUnavailable: 0
      nodeType: coordinator
      livenessProbe:
        initialDelaySeconds: 10
        periodSeconds: 5
        failureThreshold: 3
        httpGet:
          path: /status/health
          port: 8281
          scheme: HTTPS
      readinessProbe:
        initialDelaySeconds: 10
        periodSeconds: 5
        failureThreshold: 3
        httpGet:
          path: /status/health
          port: 8281
          scheme: HTTPS
      runtime.properties: |
        druid.service=druid/coordinator
        druid.log4j2.sourceCategory=druid/coordinator
        druid.coordinator.balancer.strategy=cachingCost
        druid.serverview.type=http
        druid.indexer.storage.type=metadata
        druid.coordinator.startDelay=PT10S
        druid.coordinator.period=PT5S
        # Keep the Overlord as a separate process instead of running it inside the Coordinator
        druid.coordinator.asOverlord.enabled=false

- TLS health probes: Use scheme HTTPS on port 8281 because plaintext ports are disabled cluster-wide.

- Balancer strategy: cachingCost is a production-friendly default; alternatives include cost, diskNormalized, and random.

- Start delay and period: PT10S and PT5S allow quick reaction to rule changes; increase intervals on very large clusters to reduce churn.

- Separate Overlord: druid.coordinator.asOverlord.enabled=false runs the Overlord separately, which matches the Kubernetes-native, MM-less task execution model.

- Rollout safety: maxSurge: 2 and maxUnavailable: 0 enable zero-downtime rolling updates for the Coordinator.

Overlord Component Configuration

We run Druid in MM-less mode: no MiddleManagers are deployed. Instead, the Overlord uses the Kubernetes task runner to launch each indexing task as its own Kubernetes Pod/Job, which improves isolation, right-sizes resources per task, and supports native autoscaling (as long as your cluster itself autoscales). For the full configuration, templates, and operational guidance, see Kubernetes-Native Task Execution.

    overlord:
      nodeType: "overlord"
      druid.port: 8290
      livenessProbe:
        httpGet:
          path: /status/health
          port: 8290
          scheme: HTTPS
      readinessProbe:
        httpGet:
          path: /status/health
          port: 8290
          scheme: HTTPS
      runtime.properties: |
        druid.service=druid/overlord
        druid.indexer.queue.startDelay=PT5S
        druid.indexer.fork.property.druid.processing.intermediaryData.storage.type=deepstore
        druid.indexer.storage.type=metadata
        druid.indexer.runner.namespace=druid
        druid.indexer.task.encapsulatedTask=true
        druid.indexer.runner.type=k8s
        druid.indexer.queue.maxSize=30
        druid.processing.intermediaryData.storage.type=deepstore
        druid.indexer.runner.k8s.adapter.type=customTemplateAdapter
        druid.indexer.runner.k8s.podTemplate.base=/druid/tasktemplate/default/default-task-template.yaml
        druid.indexer.runner.primaryContainerName=main
        druid.indexer.runner.debugJobs=false
        druid.indexer.runner.maxTaskDuration=PT4H
        druid.indexer.runner.taskCleanupDelay=PT2H
        druid.indexer.runner.taskCleanupInterval=PT10M
        druid.indexer.runner.K8sjobLaunchTimeout=PT1H
        druid.indexer.runner.labels={"app.kubernetes.io/networkpolicy-group":"druid"}
        druid.indexer.runner.graceTerminationPeriodSeconds=PT30S
        # showing task for 4 Weeks in WebUI
        druid.indexer.storage.recentlyFinishedThreshold=P4W
      volumeMounts:
        - name: default-task-template
          mountPath: /druid/tasktemplate/default/
      volumes:
        - name: default-task-template
          configMap:
            name: default-task-template

- TLS health probes: Use scheme HTTPS on port 8290; plaintext ports are disabled cluster-wide.

- Kubernetes-native runner: runner.type=k8s with encapsulatedTask=true and customTemplateAdapter, using the pod template at /druid/tasktemplate/default/default-task-template.yaml.

- Queue and cleanup tuning: queue.startDelay=PT5S and queue.maxSize=30 bound the task queue; the cleanup delay and interval keep the namespace clean; K8sjobLaunchTimeout guards against stuck submissions.

- Intermediary deepstore: intermediaryData.storage.type=deepstore keeps intermediary task data in the S3 deep storage configured cluster-wide.

- Volumes: The default-task-template ConfigMap mount is required for pod templating.

Broker Component Configuration

The Broker handles query routing and result merging, and it is both CPU and memory intensive. The configuration below mirrors iuneradruid-cluster.yaml and is adapted for TLS-only clusters:

    broker:
      kind: StatefulSet
      replicas: 1
      maxSurge: 1
      maxUnavailable: 0
      druid.port: 8282
      nodeType: broker
      readinessProbe:
        initialDelaySeconds: 60
        periodSeconds: 10
        failureThreshold: 30
        httpGet:
          path: /druid/broker/v1/readiness
          port: 8282
          scheme: HTTPS
      runtime.properties: |
        druid.service=druid/broker
        # HTTP server settings
        druid.server.http.numThreads=60
        # HTTP client settings
        druid.broker.http.numConnections=50
        druid.broker.http.maxQueuedBytes=10MiB
        # Processing threads and buffers
        druid.processing.buffer.sizeBytes=1536MiB
        druid.processing.numMergeBuffers=6
        druid.processing.numThreads=1
        druid.query.groupBy.maxOnDiskStorage=2
        druid.query.groupBy.maxMergingDictionarySize=300000000
        # Query cache
        druid.broker.cache.useCache=true
        druid.broker.cache.populateCache=true

- TLS readiness: Use scheme HTTPS on port 8282 because plaintext ports are disabled cluster-wide.

- Processing buffers/threads: 1536MiB buffers, 6 merge buffers, and 1 processing thread align with the reference sizing; adjust for high concurrency or large groupBy queries.

- HTTP tuning: server numThreads=60; client connections=50 and maxQueuedBytes=10MiB balance throughput and backpressure.

- Caching: Broker cache useCache=true and populateCache=true; validate hit rates and memory impact for your workloads.

- Rollout safety: maxSurge: 1 and maxUnavailable: 0 enable safe rolling updates for Brokers.
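The processing settings above determine how much direct (off-heap) memory the JVM needs. The Python sketch below applies Druid's documented sizing rule, buffer size × (numThreads + numMergeBuffers + 1), to the Broker values from this section; the function name is illustrative, and the result is a lower bound for -XX:MaxDirectMemorySize rather than a complete memory plan.

```python
MIB = 1024 * 1024


def required_direct_memory_bytes(
    buffer_size_bytes: int, num_threads: int, num_merge_buffers: int
) -> int:
    """Minimum direct memory: one buffer per thread, per merge buffer, plus one."""
    return buffer_size_bytes * (num_threads + num_merge_buffers + 1)


if __name__ == "__main__":
    # Broker values from this section: 1536MiB buffers, 1 thread, 6 merge buffers.
    broker = required_direct_memory_bytes(1536 * MIB, 1, 6)
    print(f"Broker needs at least {broker // MIB} MiB of direct memory")
```

For the Broker this yields 12288 MiB (12 GiB). The same function applied to the Historical settings (500MiB buffers, 15 threads, 4 merge buffers) gives 10000 MiB, so container memory must cover heap plus these amounts.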

Historical Component

Historical nodes serve as the data storage workhorses and must be sized for high segment cache throughput and long startup times while segments are loaded. The configuration below mirrors iuneradruid-cluster.yaml and is adapted for TLS-only clusters:

    historical:
      druid.port: 8283
      kind: StatefulSet
      nodeType: historical
      nodeConfigMountPath: /opt/druid/conf/druid/cluster/data/historical
      livenessProbe:
        initialDelaySeconds: 1800
        periodSeconds: 5
        failureThreshold: 3
        httpGet:
          path: /status/health
          port: 8283
          scheme: HTTPS
      readinessProbe:
        httpGet:
          path: /druid/historical/v1/readiness
          port: 8283
          scheme: HTTPS
        periodSeconds: 10
        failureThreshold: 18
      runtime.properties: |
        druid.service=druid/historical
        druid.log4j2.sourceCategory=druid/historical
        druid.server.http.numThreads=60
        # Processing threads and buffers
        druid.processing.buffer.sizeBytes=500MiB
        druid.query.groupBy.maxOnDiskStorage=10000000000
        druid.processing.numMergeBuffers=4
        druid.processing.numThreads=15
        druid.processing.tmpDir=var/druid/processing
        # Segment storage
        druid.segmentCache.locations=[{"path":"/var/druid/segment-cache","maxSize":"200g"}]
        # Query cache
        druid.historical.cache.useCache=true
        druid.historical.cache.populateCache=true
        druid.cache.type=caffeine
        druid.cache.sizeInBytes=256MiB

- TLS health/readiness probes: Use scheme HTTPS on port 8283; plaintext ports are disabled cluster-wide.

- Long liveness delay: initialDelaySeconds: 1800 accounts for potentially long segment loading on startup to avoid premature restarts.

- Readiness endpoint: /druid/historical/v1/readiness ensures the node only serves queries after segments are loaded and announced.

- Processing and spilling: 500MiB processing buffers with groupBy on-disk spill prevent OOMs on large groupBy queries; 4 merge buffers and 15 processing threads align with reference sizing.

- Segment cache: segmentCache.locations at /var/druid/segment-cache with maxSize 200g; size underlying storage accordingly.

Kubernetes-Native Task Execution

The Evolution Beyond MiddleManager

Traditional Druid deployments relied on MiddleManager processes to spawn and manage indexing tasks. This approach worked well in simpler environments but created several challenges in Kubernetes: resource waste from always-running MiddleManager pods, complex resource allocation, and limited isolation between tasks.

The MM-less architecture leverages Kubernetes’ native job scheduling capabilities, treating each indexing task as a Kubernetes pod. This approach provides better resource utilization, stronger isolation, and simplified scaling.

Task Runner Configuration

The Overlord component orchestrates task execution through Kubernetes APIs instead of managing MiddleManager processes:

    overlord:
      nodeType: "overlord"
      replicas: 1
      runtime.properties: |
        druid.indexer.runner.type=k8s
        druid.indexer.runner.namespace=druid
        druid.indexer.task.encapsulatedTask=true
        druid.indexer.runner.k8s.adapter.type=customTemplateAdapter
        druid.indexer.runner.k8s.podTemplate.base=/druid/tasktemplate/default/default-task-template.yaml
        druid.indexer.runner.primaryContainerName=main

The druid.indexer.runner.type=k8s configuration switches from traditional MiddleManager-based task execution to Kubernetes-native pods. Each indexing task becomes an independent pod with its own resource allocation and lifecycle.

Task Template Configuration

The task template defines how indexing pods are created. This ConfigMap-based approach provides flexibility and version control:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: default-task-template
      namespace: druid
    data:
      default-task-template.yaml: |+
        apiVersion: v1
        kind: PodTemplate
        template:
          spec:
            restartPolicy: Never
            # Same ServiceAccount as the Druid pods, as required for Kubernetes-native discovery
            serviceAccount: druid
            securityContext:
              fsGroup: 1000
              runAsGroup: 1000
              runAsUser: 1000
            containers:
              - name: main
                image: iunera/druid:29.0.1
                command: [sh, -c]
                args: ["/peon.sh /druid/data 1 --loadBroadcastSegments true"]

Advanced Task Management Configuration

The MM-less setup includes sophisticated task lifecycle management:

    druid.indexer.runner.maxTaskDuration=PT4H
    druid.indexer.runner.taskCleanupDelay=PT2H
    druid.indexer.runner.taskCleanupInterval=PT10M
    druid.indexer.runner.K8sjobLaunchTimeout=PT1H
    druid.indexer.runner.graceTerminationPeriodSeconds=PT30S

These settings ensure that long-running tasks don’t consume resources indefinitely while providing sufficient time for complex indexing operations to complete. The cleanup intervals prevent accumulation of completed task pods.
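To reason about how long a task pod can remain in the namespace, the durations above can simply be added up. The Python sketch below does that under a stated assumption: a finished pod becomes eligible for removal once the cleanup delay has passed, and the cleanup pass runs once per cleanup interval. The exact runner behavior is not spelled out in this article, so treat the result as an upper-bound estimate.

```python
from datetime import timedelta

# Values from the task lifecycle settings shown above.
MAX_TASK_DURATION = timedelta(hours=4)        # maxTaskDuration=PT4H
TASK_CLEANUP_DELAY = timedelta(hours=2)       # taskCleanupDelay=PT2H
TASK_CLEANUP_INTERVAL = timedelta(minutes=10) # taskCleanupInterval=PT10M


def worst_case_pod_lifetime(
    max_duration: timedelta, cleanup_delay: timedelta, cleanup_interval: timedelta
) -> timedelta:
    """Longest a pod may exist: full run, then the delay, then one missed cleanup pass."""
    return max_duration + cleanup_delay + cleanup_interval


if __name__ == "__main__":
    print(
        worst_case_pod_lifetime(
            MAX_TASK_DURATION, TASK_CLEANUP_DELAY, TASK_CLEANUP_INTERVAL
        )
    )
```

With these settings the estimate is 6 hours and 10 minutes, which is a useful input when sizing namespace quotas for completed pods.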

Benefits Comparison: MiddleManager vs MM-less

| Aspect | MiddleManager | MM-less (Kubernetes-native) |
|---|---|---|
| Resource Efficiency | Always-running MM pods consume resources | Dynamic pod creation only when needed |
| Isolation | Tasks share MM process space | Each task gets isolated pod |
| Scaling | Manual MM replica scaling required | Automatic scaling based on task queue |
| Monitoring | Complex multi-level monitoring | Direct pod-level monitoring |
| Resource Allocation | Fixed MM resources split between tasks | Dedicated resources per task |
| Failure Recovery | MM failure affects multiple tasks | Task failures are isolated |
| Kubernetes Integration | Limited integration | Native Kubernetes scheduling |

Production Deployment Best Practices

Enterprise Druid deployments require production-grade strategies that work consistently across dev → staging → prod while remaining auditable and reversible. Building on the GitOps workflow from Part 2, we recommend a small set of guardrails and patterns that you can apply regardless of cluster size:

- Environment strategy and promotion: Use Kustomize overlays per environment (dev, staging, prod) with clear promotion via Git PRs/tags. Keep environment deltas as patches, not forks.

- Version pinning and immutability: Pin chart/app versions and container images by digest; use semantic versioning and Git tags for releases. Record change windows in Git history.

- Progressive delivery: Start with blue‑green and optionally add canary for high‑risk changes; ensure fast, deterministic rollback paths.

- High‑availability guardrails: Define PodDisruptionBudgets (PDBs), readiness/liveness probes, maxSurge/maxUnavailable, anti‑affinity/topologySpreadConstraints.

- Autoscaling behavior: Tune HPA thresholds and scale-down policies; coordinate with Cluster Autoscaler capacity and set resource requests/limits to avoid CPU throttling.

- Observability and SLOs: Standardize health endpoints, dashboards, and alerts for query latency, task success rate, segment loading, and JVM/OS resources.

- Security by default: Least‑privilege ServiceAccounts and RBAC, NetworkPolicies, TLS everywhere with keystore rotation, image provenance/scanning, and secrets from sealed/encrypted sources.

- Data protection: Scheduled metadata backups, deep storage replication, periodic restore drills, and backup verification jobs.

- Cost and governance: Quotas and budgets per environment/tenant, cache sizing policies, and right‑sizing.

- GitOps workflow integration: PR‑based changes, policy-as-code validation, drift detection, and manual approvals for production.

Recommended GitOps layout with Kustomize overlays:

    druid/
      base/
        kustomization.yaml
        druidcluster.yaml
      overlays/
        dev/
          kustomization.yaml
          patches.yaml
        staging/
          kustomization.yaml
          patches.yaml
        prod/
          kustomization.yaml
          patches.yaml

Blue-Green Deployment Implementation

Blue-green deployments provide zero-downtime updates for critical Druid clusters:

    # Blue deployment
    metadata:
      name: iuneradruid-blue
      labels:
        deployment: blue
        app.kubernetes.io/version: "29.0.1"
    ---
    # Green deployment
    metadata:
      name: iuneradruid-green
      labels:
        deployment: green
        app.kubernetes.io/version: "29.0.2"

Service selectors can be updated to switch traffic between blue and green deployments, enabling instant rollbacks if issues arise.

Capacity Planning and Resource Allocation

Production Druid clusters require careful capacity planning based on expected workloads and growth projections.

Component Resource Recommendations

| Component | Small Cluster | Medium Cluster | Large Cluster |
|---|---|---|---|
| Broker | 8Gi memory, 2 CPU | 16Gi memory, 4 CPU | 32Gi memory, 8 CPU |
| Historical | 16Gi memory, 2 CPU | 32Gi memory, 4 CPU | 64Gi memory, 8 CPU |
| Coordinator | 4Gi memory, 1 CPU | 8Gi memory, 2 CPU | 16Gi memory, 4 CPU |
| Overlord | 2Gi memory, 1 CPU | 4Gi memory, 2 CPU | 8Gi memory, 4 CPU |

Health Checks and Monitoring Implementation

Production deployments require comprehensive health monitoring beyond basic liveness probes:

    # Broker readiness probe (port 8282)
    readinessProbe:
      initialDelaySeconds: 60
      periodSeconds: 10
      failureThreshold: 30
      httpGet:
        path: /druid/broker/v1/readiness
        port: 8282
        scheme: HTTPS
    # Historical liveness probe (port 8283)
    livenessProbe:
      initialDelaySeconds: 1800
      periodSeconds: 5
      failureThreshold: 3
      httpGet:
        path: /status/health
        port: 8283
        scheme: HTTPS

The extended initialDelaySeconds for Historical nodes accounts for segment loading time during startup.

Backup and Disaster Recovery Planning

Metadata Backup Strategy

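    # Automated PostgreSQL backup
    # The sh -c wrapper makes the redirect and $(date ...) run inside the job's
    # container on every run, not in your local shell when the CronJob is created.
    kubectl create cronjob postgres-backup \
      --image=postgres:15 \
      --schedule="0 2 * * *" \
      --restart=OnFailure \
      -- /bin/sh -c 'pg_dump -h postgres-postgresql-hl -U postgres postgres > /backup/druid-metadata-$(date +%Y%m%d).sql'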
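The imperative command cannot mount a volume at /backup or inject the database password, so in a GitOps setup a declarative manifest is usually the better fit. The following is a minimal sketch of the same backup as a CronJob. The host, user, database, image, and schedule are taken from the command above; the Secret and PersistentVolumeClaim names are hypothetical placeholders.

```yaml
# Sketch: declarative equivalent of the backup command.
# Secret and PVC names are hypothetical; replace them with your own.
apiVersion: batch/v1
kind: CronJob
metadata:
  name: postgres-backup
spec:
  schedule: "0 2 * * *"
  jobTemplate:
    spec:
      template:
        spec:
          restartPolicy: OnFailure
          containers:
            - name: pg-dump
              image: postgres:15
              command: ["/bin/sh", "-c"]
              args:
                - |
                  pg_dump -h postgres-postgresql-hl -U postgres postgres \
                    > /backup/druid-metadata-$(date +%Y%m%d).sql
              env:
                - name: PGPASSWORD            # read by pg_dump (libpq)
                  valueFrom:
                    secretKeyRef:
                      name: postgres-credentials   # hypothetical Secret
                      key: password                # hypothetical key
              volumeMounts:
                - name: backup
                  mountPath: /backup
          volumes:
            - name: backup
              persistentVolumeClaim:
                claimName: druid-metadata-backup   # hypothetical PVC
```

Writing dumps to a PVC only protects against database loss inside the cluster; copying them to object storage afterwards is a sensible next step.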

Deep Storage Backup Verification

    # S3 backup verification job
    apiVersion: batch/v1
    kind: CronJob
    metadata:
      name: druid-backup-verification
    spec:
      schedule: "0 4 * * 0" # Weekly
      jobTemplate:
        spec:
          template:
            spec:
              restartPolicy: OnFailure # Job pod templates require OnFailure or Never
              containers:
                - name: backup-verify
                  image: minio/mc:latest
                  command: ["/bin/sh", "-c"]
                  args: ["mc mirror --dry-run s3/druid-segments s3/druid-segments-backup"]

Integration with GitOps Workflow

Advanced FluxCD Configuration

Building on the GitOps foundation from Part 2, production Druid deployments benefit from sophisticated FluxCD configurations that handle complex dependency management and multi-cluster deployments.

The complete GitOps workflow integrates several FluxCD resources:

    apiVersion: source.toolkit.fluxcd.io/v1beta2
    kind: GitRepository
    metadata:
      name: druid-cluster-config
      namespace: flux-system
    spec:
      interval: 5m0s
      ref:
        branch: main
      secretRef:
        name: flux-system
      url: ssh://git@github.com/iunera/druid-cluster-config
    ---
    apiVersion: kustomize.toolkit.fluxcd.io/v1
    kind: Kustomization
    metadata:
      name: druid-cluster
      namespace: flux-system
    spec:
      interval: 10m0s
      path: ./kubernetes/druid/druidcluster
      prune: true
      sourceRef:
        kind: GitRepository
        name: druid-cluster-config
      dependsOn:
        - name: druid-operator
        - name: druid-secrets

Configuration Drift Detection

GitOps provides automatic detection of configuration drift—when running configurations diverge from the Git repository state:

    spec:
      interval: 5m0s # Reconcile and correct drift every 5 minutes
      prune: true    # Remove resources that are no longer in Git
      force: true    # Recreate resources when an immutable-field change blocks the apply

This configuration ensures your production Druid cluster always matches the declared state in your Git repository.

Multi-Cluster Deployment Patterns

Enterprise environments often require deploying Druid across multiple Kubernetes clusters for high availability or geographic distribution:

    # Cluster-specific kustomization
    apiVersion: kustomize.toolkit.fluxcd.io/v1
    kind: Kustomization
    metadata:
      name: druid-us-east-1
      namespace: flux-system
    spec:
      path: ./clusters/us-east-1/druid
      postBuild:
        substitute:
          cluster_region: "us-east-1"
          s3_endpoint: "s3.us-east-1.amazonaws.com"

Rollback Strategies and Version Control

GitOps enables sophisticated rollback strategies through Git operations:

    # Immediate rollback to previous commit
    git revert HEAD --no-edit
    git push origin main

    # Rollback to specific version
    git reset --hard <commit-hash>
    git push --force-with-lease origin main

FluxCD automatically detects these changes and applies the rollback to your Kubernetes cluster.

Advanced Integration with Conversational AI

For enterprises that want to open up analytics to a wider audience, consider integrating the Apache Druid MCP Server. It enables natural language querying of your time series data, making Druid accessible to non-technical stakeholders.

Deployment Patterns Comparison

| Pattern | Traditional | Blue-Green | Canary | Rolling |
|---|---|---|---|---|
| Downtime | High | None | Minimal | None |
| Risk | High | Low | Very Low | Medium |
| Resource Usage | Low | High | Medium | Low |
| Complexity | Low | Medium | High | Low |
| Rollback Speed | Slow | Instant | Fast | Medium |
| Testing Capability | Limited | Full | Partial | Limited |

Frequently Asked Questions

What are the advantages of MM-less Druid deployment?

You eliminate always-on MiddleManagers, run each indexing task as its own Kubernetes Pod/Job, right‑size resources per task, improve isolation and observability, and unlock native autoscaling. Troubleshooting is also simpler because each task has its own pod logs and lifecycle.

Do I still need Zookeeper?

No. When you enable Kubernetes-native discovery (druid.discovery.type=k8s) and disable ZK (druid.zk.service.enabled=false), Druid uses the Kubernetes API for discovery and announcements. Ensure the druid-kubernetes-extensions extension is loaded and that RBAC for Pods/Endpoints/Services/Leases is in place (see “Zookeeper-less Installation”).

How should I size resources for production?

Start from the guidance in this article: Brokers 16–32Gi/4–8 CPU, Historicals 32–64Gi with 200–500Gi ephemeral for segment cache, Coordinators 8–16Gi/2–4 CPU, Overlord 2–4Gi/1–2 CPU in MM‑less setups. Adjust based on query concurrency, segment size, and MSQ usage; validate with load tests.

How do I configure TLS with Java keystores?

Mount keystores at /druid/jks, pass passwords via Secrets/env, set client/server TLS properties in common.runtime.properties, and disable plaintext ports (druid.enablePlaintextPort=false). Use TLSv1.2+ and approved cipher suites. Update probes and Services to use HTTPS schemes/ports.

What’s the recommended backup/DR approach?

Back up the metadata store (e.g., PostgreSQL CronJobs), verify deep storage replication, and run periodic restore drills. Automate verification jobs (e.g., S3 mirror dry‑runs) and keep encryption keys separate. Use GitOps history for deterministic rollbacks.

How can I troubleshoot task execution in MM-less mode?

Check Overlord logs for scheduling and Kubernetes runner messages, verify service account RBAC, and inspect individual task pods with kubectl logs/kubectl describe. Confirm the task template ConfigMap is mounted and the customTemplateAdapter path is correct.

Which production metrics matter most?

Query latency/throughput, task success rate, segment loading/dropping, JVM heap and direct memory, cache hit rate, disk usage for segment cache, and inter-component network I/O. Alert on query failures, slow segment loading, and resource saturation.

How do I rotate certificates without downtime?

Update the TLS Secret, trigger rolling restarts, and rely on readiness/liveness probes using HTTPS to validate. Consider cert‑manager for automated renewals. Use GitOps so rotations are auditable and revertible.
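If you go the cert-manager route, a Certificate resource can issue and renew the certificate and also write Java keystores into the target Secret. The following is a minimal sketch under stated assumptions: the resource names, namespace, issuer, DNS name, and lifetimes are hypothetical, and the keystore password Secret must already exist.

```yaml
# Sketch: cert-manager Certificate that also emits JKS keystores.
# All names, the namespace, the issuer, and the DNS name are hypothetical.
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: druid-tls
  namespace: druid
spec:
  secretName: druid-tls          # Secret that receives the certificate and keystores
  duration: 2160h                # 90 days
  renewBefore: 360h              # renew 15 days before expiry
  dnsNames:
    - "*.druid.svc.cluster.local"
  issuerRef:
    name: internal-ca
    kind: ClusterIssuer
  keystores:
    jks:
      create: true
      passwordSecretRef:
        name: druid-keystore-password
        key: password
```

With `keystores.jks.create` enabled, cert-manager adds a `keystore.jks` entry (and a `truststore.jks` entry when the issuer provides a CA) to the Secret, which can then be mounted at /druid/jks. Renewal only updates the Secret, so the rolling restart described above is still what makes the pods pick up the new keystore.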

How do I migrate from an existing Zookeeper/MM cluster to Kubernetes‑native?

Stage a parallel cluster with K8s discovery and MM‑less runner, mirror ingestion, validate queries/latency, then flip traffic using blue‑green or router/service selector switches. Keep deep storage shared (or replicate) to minimize re‑ingestion when feasible.

Is pac4j required?

No. This guide uses pac4j (OIDC) for human SSO and basic auth for service users, but you can start with basic auth/escalator only and add pac4j later. Authorization is handled via the basic authorizer in this setup.

Conclusion

You now have a production‑grade, TLS‑only, MM‑less Apache Druid cluster running natively on Kubernetes and managed via GitOps. The patterns in this guide—Kubernetes service discovery, pod‑templated task execution, hardened RBAC, and environment‑based configuration—give you a repeatable, auditable path from dev to prod.

Go‑live checklist

- TLS everywhere with secret rotation procedure validated

- RBAC and service accounts least‑privilege, including task pods

- Health/readiness probes using HTTPS and realistic initial delays

- S3 deep storage and index logs configured with lifecycle/retention

- Backups and a restore drill completed for the metadata store

- Blue‑green or rollback plan documented and tested

- Dashboards and alerts for query latency, task success, segment loading, JVM/direct memory, and cache hit rate

What’s next

In the next part of the series we implement authentication and authorization, combining pac4j (OIDC) for human users with Druid’s basic authorizer for service‑to‑service access, and we harden roles, users, and secret management to meet enterprise requirements.